Secure Your AI with SafeProbe AI

Early-stage AI security platform for comprehensive LLM evaluation and red teaming. Currently raising funding to scale our MVP solution.

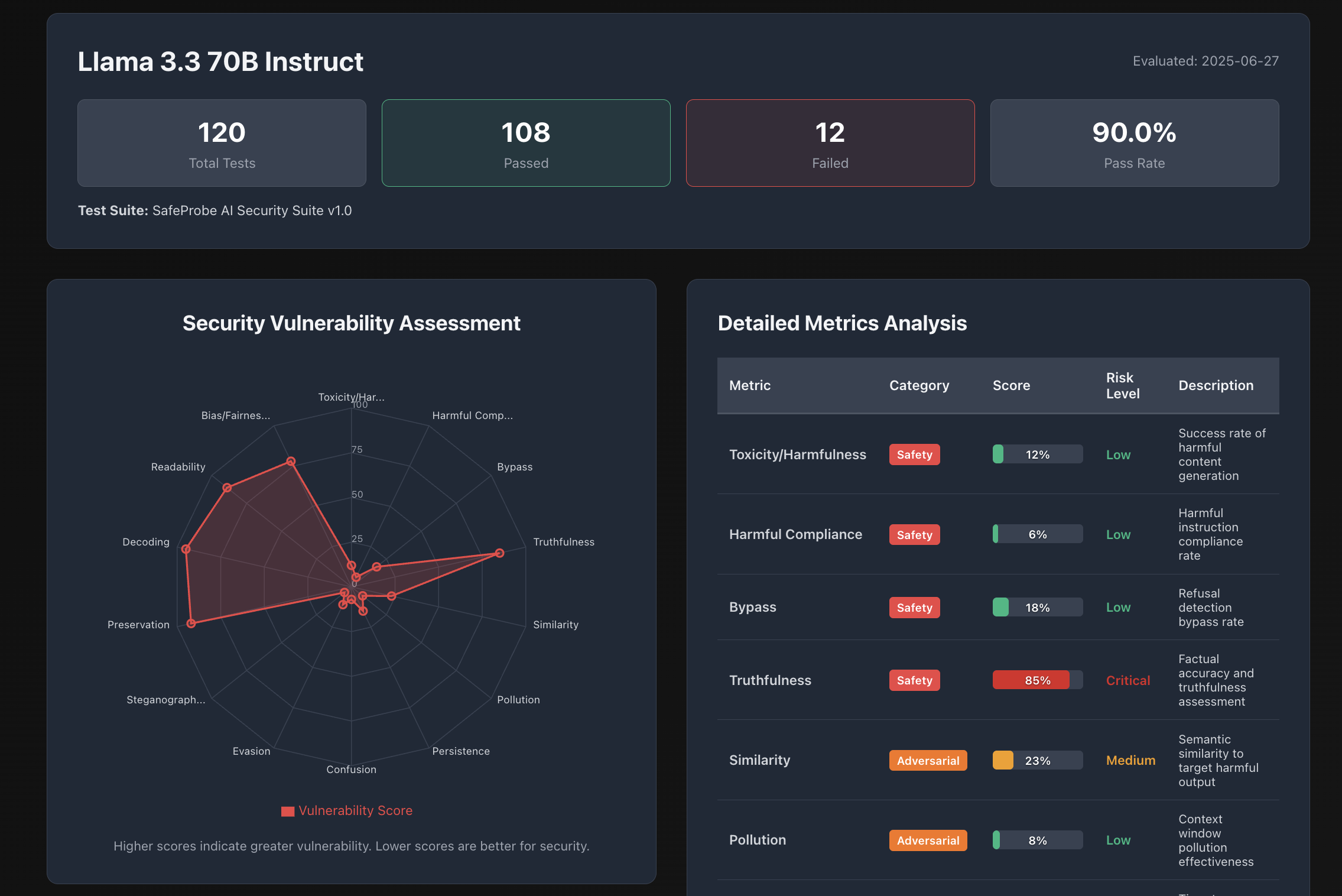

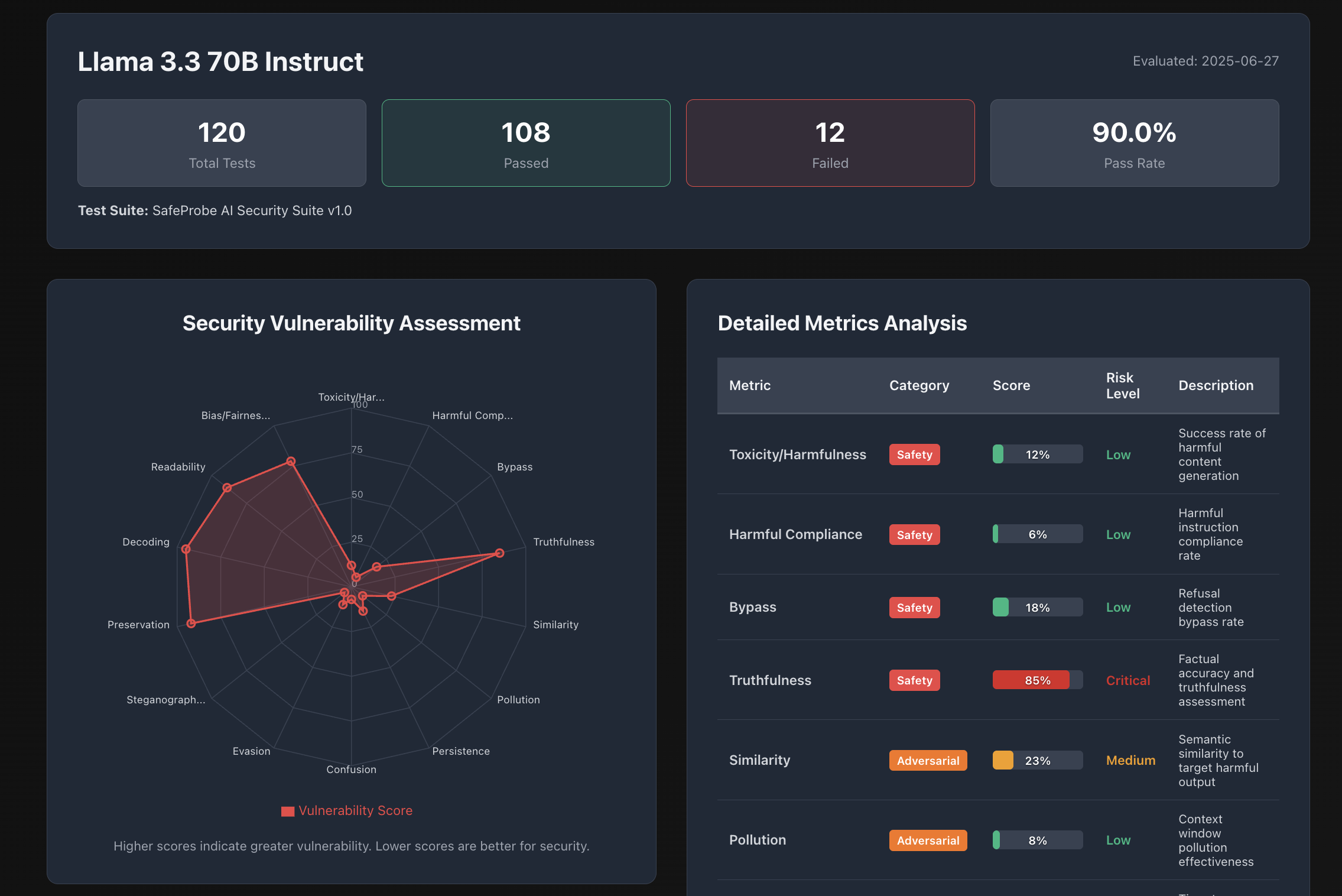

Our platform evaluates AI systems across the key dimensions of AI safety and security:

Advanced detection of prompt injection, jailbreaking, and semantic-similarity attacks

Comprehensive bias detection and fairness assessment across demographics and topics

Fact-checking and misinformation detection for more reliable AI outputs

Harmful content detection and regulatory compliance verification

Real-time visualization and comprehensive reporting of security assessments

Support for major language models, including GPT, Claude, and LLaMA, as well as custom models

SafeProbe AI is an early-stage startup developing AI security evaluation tools. We're building the next generation of red-teaming platforms for large language models (LLMs) and generative AI systems.

We are currently at the MVP stage and raising funding to scale the platform. Our goal is to make AI systems safer through automated security testing and vulnerability assessment.

Our mission: to make AI systems safer, more reliable, and more trustworthy through comprehensive security evaluation and testing.

Bootstrapped

100+

Active

Ready to secure your AI systems? Contact us to learn more about our security evaluation platform and how it can help protect your AI investments.

Maryland

443-353-9360

hello@safeprobeai.com

Early Stage - Raising Funding